Integrate Workbench with Databricks

These instructions describe how to configure the Databricks integration in Posit Workbench.

Both Azure Databricks and Databricks on AWS are supported.

Advantages of Workbench’s integration for Databricks

Workbench has a native integration for Databricks that includes support for managed Databricks OAuth credentials and a dedicated pane in the RStudio Pro IDE. For more information, review the Databricks in RStudio Pro guide.

The Databricks integration allows end users to sign into a Databricks workspace from the home page and, using their existing identity, be immediately granted access to data and compute resources.

Workbench-managed credentials have several security and usability advantages over user-managed credentials, and we recommend them whenever possible:

- Users arrive in a session to find that the Databricks CLI and most official SDKs and database drivers for Python and R work without needing a separate step to configure credentials.

- Anything that implements the Databricks client unified authentication standard will pick up the ambient credentials supplied by Workbench.

- See the Databricks client unified authentication documentation for additional information.

- Users do not need to manage sensitive, long-lived Databricks personal access tokens (PATs) to have individually scoped permissions.

- Administrators can grant or revoke granular permissions for individuals directly through their Databricks account.

Requirements

Currently, this feature is only supported for RStudio Pro and VS Code sessions.

Before you begin, you must:

- Ensure that end users are using HTTPS to access Workbench. For security reasons, web browsers do not allow the required OAuth flows to take place without HTTPS in place. For additional information, see the Mozilla Developer - Secure contexts documentation.

- Enable the Job Launcher with the launcher-session-callback-address setting configured correctly. See the Job Launcher section for more information.

- For Databricks on AWS, have a Databricks administrator available to create a custom OAuth app integration for Workbench.

- For Azure Databricks, have an Azure administrator available to create Microsoft Entra ID resources and configure your Databricks instance.

Databricks configuration

Workbench requires a dedicated OAuth client on the Databricks platform to manage Databricks credentials, and the steps to configure this client vary by cloud provider.

Azure Databricks

For Azure Databricks, you need to:

- Register an app in Entra ID to use as a service principal in Azure Databricks by following the Microsoft - Manage service principals documentation.

- Configure that same app with API permissions to access Azure Databricks using the Microsoft - Configure an app in Azure portal documentation.

- Record the client ID and secret during this process and ensure that the Entra ID app’s redirect URLs include:

https://<my-workbench-url>/oauth_redirect_callback

Databricks on AWS

For Databricks on AWS, before you begin:

- A Databricks administrator needs to register a custom OAuth app integration for Workbench. Reference the Create Custom OAuth App Integration Databricks API documentation.

- There is no Databricks UI for this, so you must use the Databricks CLI. Follow these official guides to:

After you’ve completed the steps above:

Use the CLI to create the integration:

Terminal

$ databricks account custom-app-integration create \
    --json '{"name":"posit-workbench", "redirect_urls":["https://<my-workbench-url>/oauth_redirect_callback"], "scopes":["all-apis","offline_access"]}'

The output should be similar to the following:

{"integration_id":"<integration-id>","client_id":"<client-id>","client_secret":"<client-secret>"}

Record the client ID and secret (which may be empty).
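As a minimal sketch, the JSON printed by the CLI can be parsed to pull out the values worth recording. The field names follow the sample output above; the `parse_integration_output` helper is hypothetical and is not part of the Databricks CLI or Workbench.

```python
import json


def parse_integration_output(raw: str) -> dict:
    """Extract the values to record from the CLI's JSON output.

    Hypothetical helper; field names are taken from the sample output above.
    """
    data = json.loads(raw)
    return {
        "integration_id": data["integration_id"],
        "client_id": data["client_id"],
        # The secret may be missing or empty, so normalize both cases to None.
        "client_secret": data.get("client_secret") or None,
    }


# Placeholder values only; substitute the real CLI output.
sample = '{"integration_id":"<integration-id>","client_id":"<client-id>","client_secret":""}'
print(parse_integration_output(sample))
```

Normalizing an empty secret to `None` makes it easier to decide later whether a client-secret entry is needed in the Workbench configuration.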

Should you need to delete or disable this integration in the future, use the Databricks CLI as follows:

Terminal

$ databricks account custom-app-integration delete <integration-id>

Workbench configuration

All Databricks integrations are disabled by default and must be enabled explicitly.

Enable Databricks integrations by adding the following to rserver.conf:

/etc/rstudio/rserver.conf

databricks-enabled=1

Restart any running sessions for this change to take effect.

Workbench supports connecting to multiple Databricks workspaces, even across multiple cloud providers. Many organizations have only one Databricks workspace, but others may have production and non-production workspaces or workspaces dedicated to a specific line of business.

Configure available Databricks workspaces in the /etc/rstudio/ directory in a databricks.conf file. Below is an example of what this file could contain:

/etc/rstudio/databricks.conf

[production]

url=https://our-organization.cloud.databricks.com

client-id=12345678-abcd-1234-4567-abcdef123456

[aws-staging]

name=Staging (AWS)

url=https://our-organization-staging.cloud.databricks.com

client-id=12345678-abcd-1234-4567-abcdef123456

client-secret=98765432-dcba-4321-7654-987654fedcba

[azure]

name=Production (Azure)

url=https://databricks-workspace.azuredatabricks.net

client-id=12345678-abcd-1234-4567-abcdef123456

client-secret=98765432-dcba-4321-7654-987654fedcba

Azure Databricks workspaces are detected automatically when the url ends with one of the following:

- azuredatabricks.net

- databricks.azure.us

- databricks.azure.cn
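The suffix rule above can be illustrated with a short sketch. This is not Workbench's actual detection code: the `looks_like_azure_databricks` function is hypothetical, and comparing against the URL's hostname (rather than the raw string) is an assumption made so that trailing slashes or paths do not affect the result.

```python
from urllib.parse import urlparse

# Suffixes listed in this guide for Azure Databricks workspaces.
AZURE_SUFFIXES = (
    "azuredatabricks.net",
    "databricks.azure.us",
    "databricks.azure.cn",
)


def looks_like_azure_databricks(url: str) -> bool:
    """Return True if the URL's hostname ends with a known Azure suffix.

    Hypothetical helper; assumes the url includes a scheme such as https://.
    """
    host = (urlparse(url).hostname or "").lower()
    return host.endswith(AZURE_SUFFIXES)


print(looks_like_azure_databricks("https://databricks-workspace.azuredatabricks.net"))
print(looks_like_azure_databricks("https://our-organization.cloud.databricks.com"))
```

A URL written without a scheme has no parseable hostname in this sketch and is therefore reported as not Azure.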

Each [header] names a section with the following properties:

| Key | Value | Description | Required |
|---|---|---|---|
| url | URL | The URL of a Databricks workspace that services your organization. | Yes |
| client-id | string | The client ID of the OAuth app created for Workbench. See Databricks configuration above. | Yes |
| client-secret | string | The client secret of the OAuth app created for Workbench. See Databricks configuration above. | Not always |
| name | string | An optional user-friendly name for this Databricks workspace. If not specified, Workbench will use the section header. | No |

The client-secret key is required for all Azure Databricks configurations and for Databricks on AWS if the custom OAuth app integration was created with the confidential flag.
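Before reloading the service, it can help to sanity-check the file against the rules in the table above. The following is a rough sketch that assumes the file can be read by Python's `configparser`, which may not match Workbench's own parser in every detail. The `check_databricks_conf` function is hypothetical, and it only enforces the Azure client-secret rule because the confidential flag of an AWS integration cannot be inferred from the file.

```python
import configparser
from urllib.parse import urlparse

# Suffixes listed in this guide for Azure Databricks workspaces.
AZURE_SUFFIXES = ("azuredatabricks.net", "databricks.azure.us", "databricks.azure.cn")


def check_databricks_conf(text: str) -> list[str]:
    """Return a list of problems found in databricks.conf-style text.

    Hypothetical helper, not part of Workbench.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    problems = []
    for section in parser.sections():
        entry = parser[section]
        # url and client-id are marked as required in the table.
        for key in ("url", "client-id"):
            if not entry.get(key):
                problems.append(f"[{section}] is missing required key '{key}'")
        # client-secret is required for every Azure Databricks workspace.
        host = (urlparse(entry.get("url", "")).hostname or "").lower()
        if host.endswith(AZURE_SUFFIXES) and not entry.get("client-secret"):
            problems.append(f"[{section}] is an Azure workspace but has no 'client-secret'")
    return problems


sample = """
[production]
url=https://our-organization.cloud.databricks.com
client-id=12345678-abcd-1234-4567-abcdef123456

[azure]
name=Production (Azure)
url=https://databricks-workspace.azuredatabricks.net
client-id=12345678-abcd-1234-4567-abcdef123456
"""
for problem in check_databricks_conf(sample):
    print(problem)
```

In practice the text would be read from /etc/rstudio/databricks.conf; the inline sample is used here only to keep the sketch self-contained.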

Reload the Workbench service for the changes to take effect:

Terminal

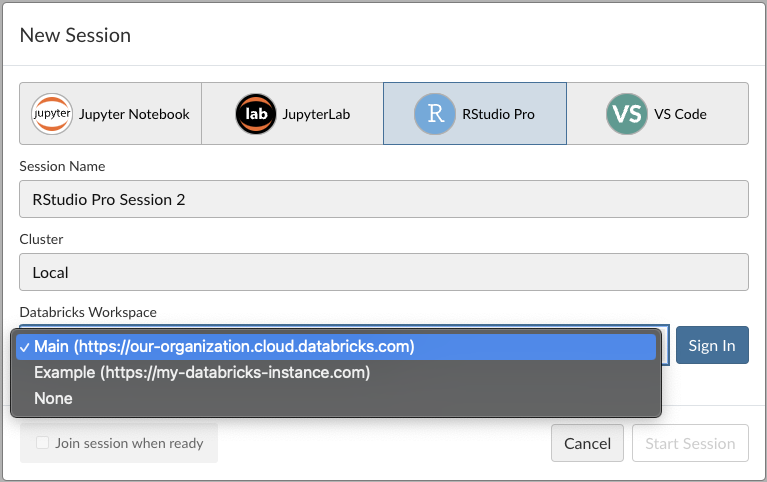

$ sudo rstudio-server reloadVerify that the Databricks configuration has been picked up correctly by logging into the homepage and clicking New Session.

Databricks hosts in session launcher dialog

Workbench-managed credentials automatically refresh when sessions are actively using them.

Troubleshooting

Permission errors:

- This integration relies on a properly configured OAuth client. If you encounter permission errors during the Databricks sign-in flow from the home page, verify that the redirect URL on the Databricks side is correct (including the https:// scheme) and that the client-id and client-secret (if applicable) set in the databricks.conf file have been copied correctly from the OAuth app.
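A quick way to rule out a malformed redirect URL is to check its parts programmatically. The sketch below is illustrative only: `check_redirect_url` is a hypothetical helper, and it checks that the path ends with /oauth_redirect_callback (rather than equals it) on the assumption that Workbench might be served under a path prefix.

```python
from urllib.parse import urlparse


def check_redirect_url(url: str) -> list[str]:
    """Return a list of problems with a Workbench OAuth redirect URL.

    Hypothetical helper; an empty list means no problems were found.
    """
    problems = []
    parsed = urlparse(url)
    if parsed.scheme != "https":
        problems.append("redirect URL should start with https://")
    if not parsed.netloc:
        problems.append("redirect URL has no host")
    if not parsed.path.endswith("/oauth_redirect_callback"):
        problems.append("redirect URL path should end with /oauth_redirect_callback")
    return problems


# workbench.example.com is a placeholder host.
print(check_redirect_url("https://workbench.example.com/oauth_redirect_callback"))
print(check_redirect_url("workbench.example.com/oauth_redirect_callback"))
```

The second call shows the common mistake of omitting the scheme, which leaves the URL without a recognizable host.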

Databricks-related environment variables:

- End users configuring their own Databricks-related environment variables for a session may override or interfere with Workbench-managed credentials. We suggest that users who manage their own credentials select the None option for the Databricks workspace when launching sessions from the home page, which disables Workbench-managed credentials for that session.
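To see whether a session has any Databricks-related environment variables in play, a short diagnostic like the one below may help. It assumes the relevant variables use the DATABRICKS_ prefix (for example DATABRICKS_HOST or DATABRICKS_TOKEN from the unified authentication standard). Which of these Workbench sets itself is not specified in this guide, so the output only shows what is present, not where it came from. The `databricks_env_vars` function is hypothetical.

```python
import os


def databricks_env_vars(environ=None) -> dict:
    """List DATABRICKS_* variables without revealing their values.

    Hypothetical helper; values are masked because they may hold secrets.
    """
    env = os.environ if environ is None else environ
    return {
        name: ("<set>" if value else "<empty>")
        for name, value in sorted(env.items())
        if name.startswith("DATABRICKS_")
    }


for name, state in databricks_env_vars().items():
    print(f"{name}: {state}")
```

Running this inside a session started with the None option and again in a session with a workspace selected gives a simple before-and-after comparison.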